Longde Huang

PhD student

About me

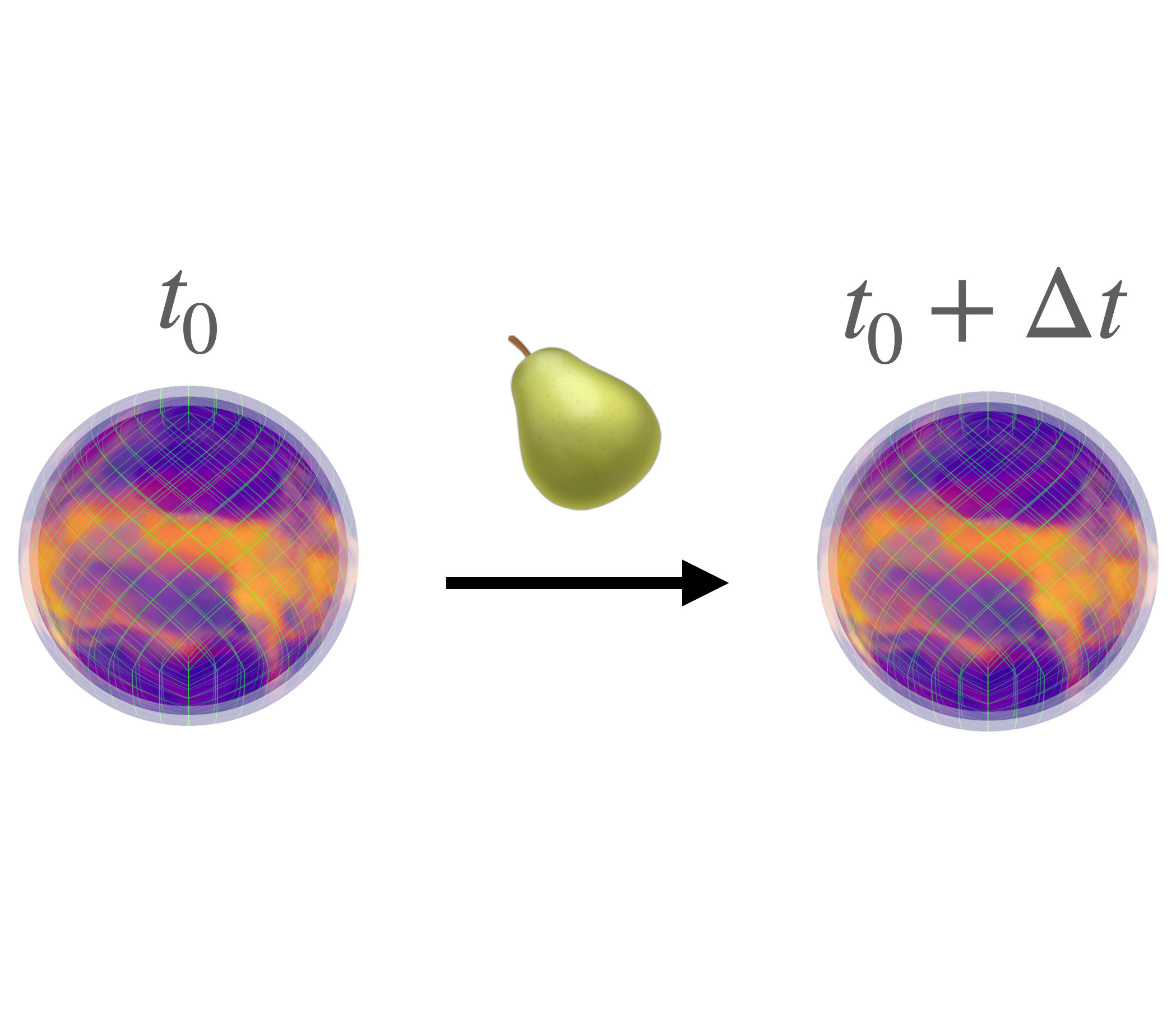

My current research focuses on the theoretical side of equivariant neural networks, in particular on the trade-off between enforcing symmetry through data augmentation and enforcing it through manifestly equivariant architectures. More concretely, I view the training dynamics as a stochastic process and aim to understand the asymptotic symmetry properties that emerge from random sampling and parameter initialization.
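The following is a toy sketch of the augmentation-versus-architecture trade-off, built on assumptions chosen only for illustration and not taken from any specific paper. It uses a linear model f(x) = <w, x> on 8x8 inputs, the rotation group C4 acting through `np.rot90`, Gaussian inputs, a rotation-invariant linear teacher, and plain SGD. The helper names `symmetrize`, `invariance_error` and `train_with_augmentation` are hypothetical. The sketch compares two approaches: enforcing symmetry by showing the model a randomly rotated copy of each sample, and building invariance into the model by group averaging.

```python
import numpy as np

def symmetrize(w):
    """Group average over C4 (Reynolds operator): projects w onto rotation-invariant weights."""
    return sum(np.rot90(w, k) for k in range(4)) / 4.0

def invariance_error(w):
    """Norm of the non-invariant part of w; the model x -> <w, x> is C4-invariant iff this is 0."""
    return float(np.linalg.norm(w - symmetrize(w)))

def train_with_augmentation(w0, w_star, steps, lr, rng):
    """SGD on the squared loss; each step draws a fresh Gaussian input and a random rotation of it."""
    w = w0.copy()
    n = w.shape[0]
    for _ in range(steps):
        x = rng.standard_normal((n, n))
        y = np.sum(w_star * x)                      # label from an invariant teacher (assumption)
        x_aug = np.rot90(x, int(rng.integers(4)))   # augmentation: rotated input, same label
        residual = np.sum(w * x_aug) - y
        w -= lr * residual * x_aug                  # gradient step on 0.5 * residual**2
    return w

rng = np.random.default_rng(0)
n = 8
w_star = symmetrize(rng.standard_normal((n, n)))   # C4-invariant teacher weights
w0 = rng.standard_normal((n, n))                   # random, non-invariant initialization

# Route 1: data augmentation. Invariance is only approached as training proceeds.
for steps in (0, 100, 1000):
    w_aug = train_with_augmentation(w0, w_star, steps, lr=0.005, rng=np.random.default_rng(1))
    print(f"augmentation, {steps:4d} steps: invariance error {invariance_error(w_aug):.2e}")

# Route 2: manifestly invariant model f(x) = <symmetrize(w), x>, invariant for every w.
print(f"symmetrized model: invariance error {invariance_error(symmetrize(w0)):.2e}")
```

In this toy setting, SGD on augmented samples drives the non-invariant part of `w` toward zero only asymptotically. How quickly it does so depends on the sampled inputs, the sampled rotations and the initialization. The symmetrized parametrization, by contrast, is invariant at every step by construction.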

My prior background is in mathematics and topology. This background motivated me to work on gauge-equivariant neural networks for predicting topological invariants of multiband insulators, and to study symmetries arising in mathematics and physics. These experiences continue to shape my current research, in which I have reoriented myself toward the more general and abstract structures underlying them, with the aim of developing a theoretical framework for equivariance in neural networks.

My research is supported by the Wallenberg AI, Autonomous Systems and Software Program (WASP).

Education

MSc in Engineering Mathematics and Computational Science at Chalmers University of Technology, Sweden (2023)

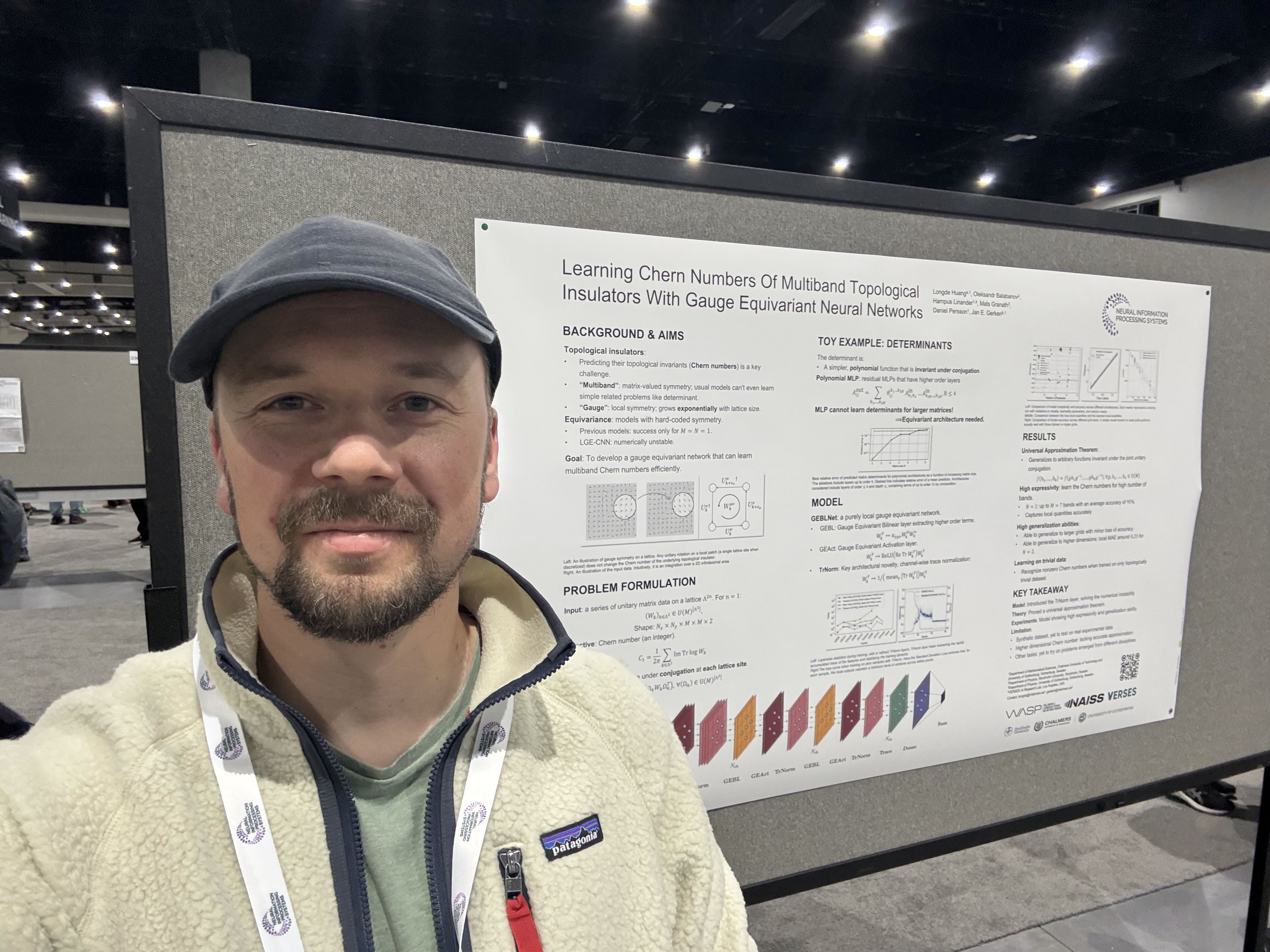

Thesis: Learning Chern Numbers of Topological Insulators with Gauge Equivariant Neural Networks

Supervised by: Prof. Jan Gerken

Publications

Learning Chern Numbers of Topological Insulators with Gauge Equivariant Neural Networks

Longde Huang, Oleksandr Balabanov, Hampus Linander, Mats Granath, Daniel Persson, Jan E. Gerken

Equivariant network architectures are a well-established tool for predicting invariant or equivariant quantities. However, almost all learning problems considered in this context feature a global symmetry, i.e. each point of the underlying space is transformed with the same group element, as opposed to a local gauge symmetry, where each point is transformed with a different group element, exponentially enlarging the size of the symmetry group. Gauge equivariant networks have so far mainly been applied to problems in quantum chromodynamics. Here, we introduce a novel application domain for gauge-equivariant networks in the theory of topological condensed matter physics. We use gauge equivariant networks to predict topological invariants (Chern numbers) of multiband topological insulators. The gauge symmetry of the network guarantees that the predicted quantity is a topological invariant. We introduce a novel gauge equivariant normalization layer to stabilize the training and prove a universal approximation theorem for our setup. We train on samples with trivial Chern number only but show that our models generalize to samples with non-trivial Chern number. We provide various ablations of our setup. Our code is available at this https URL.
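The abstract states that gauge symmetry guarantees the predicted quantity is a topological invariant. The sketch below checks this numerically for the target quantity. It is not the paper's network. Instead, it computes a lattice Chern number using the standard Fukui-Hatsugai-Suzuki method, applied to the two-band Qi-Wu-Zhang model. That model was chosen here as a toy Chern insulator and is not taken from the paper. The sketch then applies an independent random unitary at every k-point, which is a local gauge transformation, and confirms that the result is unchanged. All function names are hypothetical.

```python
from functools import partial
import numpy as np

# Pauli matrices
SX = np.array([[0, 1], [1, 0]], dtype=complex)
SY = np.array([[0, -1j], [1j, 0]], dtype=complex)
SZ = np.array([[1, 0], [0, -1]], dtype=complex)

def qwz_hamiltonian(kx, ky, m):
    """Two-band Qi-Wu-Zhang model (toy example chosen for illustration)."""
    return np.sin(kx) * SX + np.sin(ky) * SY + (m + np.cos(kx) + np.cos(ky)) * SZ

def occupied_states(hamiltonian, n_k, n_occ):
    """Lowest n_occ eigenvectors on an n_k x n_k Brillouin-zone grid, shape (n_k, n_k, dim, n_occ)."""
    ks = 2 * np.pi * np.arange(n_k) / n_k
    states = []
    for kx in ks:
        row = []
        for ky in ks:
            _, vecs = np.linalg.eigh(hamiltonian(kx, ky))  # eigenvalues in ascending order
            row.append(vecs[:, :n_occ])
        states.append(row)
    return np.array(states)

def link(u_a, u_b):
    """U(1) link variable: normalized det of the overlap matrix between neighbouring k-points."""
    d = np.linalg.det(u_a.conj().T @ u_b)
    return d / abs(d)

def chern_number(u):
    """Fukui-Hatsugai-Suzuki lattice Chern number of the occupied subspace."""
    n_k = u.shape[0]
    total = 0.0
    for i in range(n_k):
        for j in range(n_k):
            ip, jp = (i + 1) % n_k, (j + 1) % n_k  # periodic Brillouin zone
            plaquette = (link(u[i, j], u[ip, j]) * link(u[ip, j], u[ip, jp])
                         * link(u[ip, jp], u[i, jp]) * link(u[i, jp], u[i, j]))
            total += np.angle(plaquette)  # principal branch (-pi, pi]
    return total / (2 * np.pi)

def random_gauge(u, rng):
    """Local gauge transformation u(k) -> u(k) g(k) with an independent random unitary g(k)."""
    n_k, _, _, n_occ = u.shape
    out = np.empty_like(u)
    for i in range(n_k):
        for j in range(n_k):
            z = rng.standard_normal((n_occ, n_occ)) + 1j * rng.standard_normal((n_occ, n_occ))
            q, _ = np.linalg.qr(z)  # Q factor of a complex matrix is unitary
            out[i, j] = u[i, j] @ q
    return out

rng = np.random.default_rng(0)
u = occupied_states(partial(qwz_hamiltonian, m=1.0), n_k=24, n_occ=1)
print("Chern number, m=1:", round(chern_number(u), 6))
print("after random local gauge transform:", round(chern_number(random_gauge(u, rng)), 6))
print("Chern number, m=3:", round(chern_number(occupied_states(partial(qwz_hamiltonian, m=3.0), 24, 1)), 6))
```

The result is gauge invariant because each factor det g(k) enters a closed plaquette once directly and once conjugated, so these factors cancel. The determinant form of the link variable also handles several occupied bands, which matches the multiband setting of the paper.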