Oscar Carlsson

PhD student 2020-2025

About me

During my years as a PhD student under Daniel Persson I explored many different areas of geometric deep learning. This taught me that I am strongly drawn to the places where geometry meets machine learning, and especially to cases where symmetries of the task can be used to improve the models. A special case of this is the field of equivariant neural networks. Although I am in general more interested in the mathematical structures in and behind equivariant networks (see our paper “Geometric deep learning and equivariant neural networks”), this has not stopped me from pursuing several more practical problems in equivariance and spherical computer vision (see the papers “Equivariance versus Augmentation for Spherical Images” and “HEAL-SWIN: A Vision Transformer On The Sphere”).

For those interested in mathematical structures for non-linear layers I would recommend my paper “Equivariant non-linear maps for neural networks on homogeneous spaces”, co-written with Elias Nyholm, Maurice Weiler, and Daniel Persson. This paper constructs a unifying framework in which multiple well-known layers (both linear and non-linear) appear as special cases.

Additionally, please read my PhD thesis “Geometry and Symmetry in Deep Learning: From Mathematical Foundations to Vision Applications”, which I successfully defended on 29 August 2025.

Currently, I have a full-time teaching position, limited to one year, at the Department of Mathematical Sciences at Chalmers University of Technology.

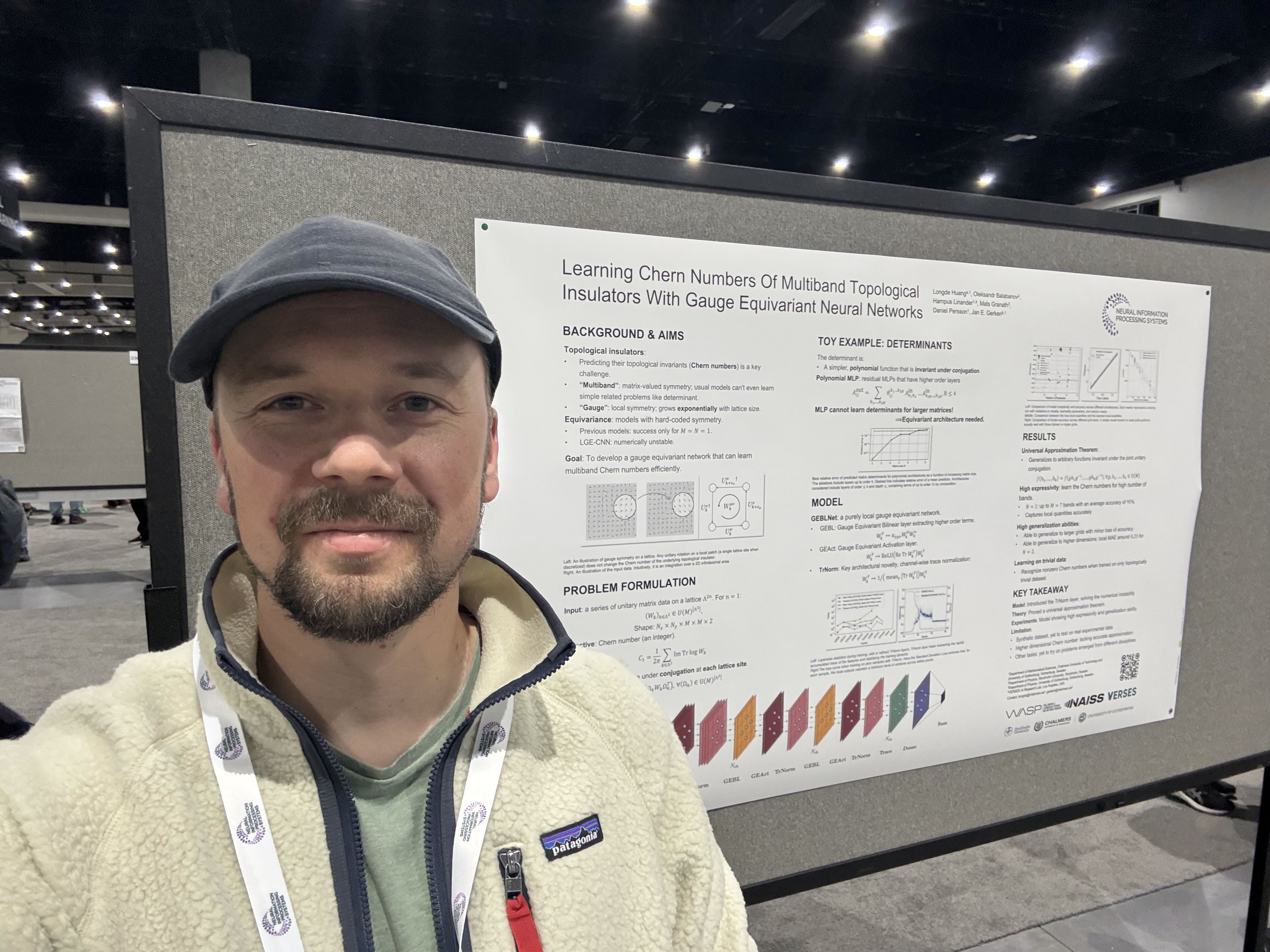

My research was supported by the Wallenberg AI, Autonomous Systems and Software Program (WASP).

Publications

Equivariant non-linear maps for neural networks on homogeneous spaces

Elias Nyholm, Oscar Carlsson, Maurice Weiler, Daniel Persson

This paper presents a novel framework for non-linear equivariant neural network layers on homogeneous spaces. The seminal work of Cohen et al. on equivariant G-CNNs on homogeneous spaces characterized the representation theory of such layers in the linear setting, finding that they are given by convolutions with kernels satisfying so-called steerability constraints. Motivated by the empirical success of non-linear layers, such as self-attention or input-dependent kernels, we set out to generalize these insights to the non-linear setting. We derive generalized steerability constraints that any such layer needs to satisfy and prove the universality of our construction. The insights gained into the symmetry-constrained functional dependence of equivariant operators on feature maps and group elements inform the design of future equivariant neural network layers. We demonstrate how several common equivariant network architectures - G-CNNs, implicit steerable kernel networks, conventional and relative position embedded attention based transformers, and LieTransformers - may be derived from our framework.

Geometric deep learning and equivariant neural networks

Jan E. Gerken, Jimmy Aronsson, Oscar Carlsson, Hampus Linander, Fredrik Ohlsson, Christoffer Petersson, Daniel Persson

We survey the mathematical foundations of geometric deep learning, focusing on group equivariant and gauge equivariant neural networks. We develop gauge equivariant convolutional neural networks on arbitrary manifolds \(\mathcal{M}\) using principal bundles with structure group \(K\) and equivariant maps between sections of associated vector bundles. We also discuss group equivariant neural networks for homogeneous spaces \(\mathcal{M}=G/K\), which are instead equivariant with respect to the global symmetry \(G\) on \(\mathcal{M}\). Group equivariant layers can be interpreted as intertwiners between induced representations of \(G\), and we show their relation to gauge equivariant convolutional layers. We analyze several applications of this formalism, including semantic segmentation and object detection networks. We also discuss the case of spherical networks in great detail, corresponding to the case \(\mathcal{M}=S^2=\textrm{SO}(3)/\textrm{SO}(2)\). Here we emphasize the use of Fourier analysis involving Wigner matrices, spherical harmonics and Clebsch-Gordan coefficients for \(G=\textrm{SO}(3)\), illustrating the power of representation theory for deep learning.

HEAL-SWIN: A Vision Transformer On The Sphere

Oscar Carlsson, Jan E. Gerken, Hampus Linander, Heiner Spieß, Fredrik Ohlsson, Christoffer Petersson, Daniel Persson

High-resolution wide-angle fisheye images are becoming more and more important for robotics applications such as autonomous driving. However, using ordinary convolutional neural networks or vision transformers on this data is problematic due to projection and distortion losses introduced when projecting to a rectangular grid on the plane. We introduce the HEAL-SWIN transformer, which combines the highly uniform Hierarchical Equal Area iso-Latitude Pixelation (HEALPix) grid used in astrophysics and cosmology with the Hierarchical Shifted-Window (SWIN) transformer to yield an efficient and flexible model capable of training on high-resolution, distortion-free spherical data. In HEAL-SWIN, the nested structure of the HEALPix grid is used to perform the patching and windowing operations of the SWIN transformer, resulting in a one-dimensional representation of the spherical data with minimal computational overhead. We demonstrate the superior performance of our model for semantic segmentation and depth regression tasks on both synthetic and real automotive datasets. Our code is available at https://github.com/JanEGerken/HEAL-SWIN.
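As a rough illustration of why the nested structure makes patching cheap: in the HEALPix nested ordering, the four children of a pixel with index \(p\) have indices \(4p\) to \(4p+3\) at the next resolution level, so grouping pixels into patches of size \(4^k\) reduces to a reshape of the flat pixel array. The sketch below assumes a full-sphere map already stored in nested order. The helper name `nested_patches` is hypothetical, and this is not the HEAL-SWIN implementation.

```python
import numpy as np


def nested_patches(pixels: np.ndarray, patch_size: int) -> np.ndarray:
    """Group pixels stored in HEALPix nested order into patches.

    Hypothetical helper for illustration only; not taken from the
    HEAL-SWIN code base.
    """
    # Valid patch sizes are powers of four: 1, 4, 16, ...
    is_power_of_two = patch_size >= 1 and (patch_size & (patch_size - 1)) == 0
    if not is_power_of_two or (patch_size.bit_length() - 1) % 2 != 0:
        raise ValueError("patch_size must be a power of 4")

    n_pixels = pixels.shape[0]
    if n_pixels % patch_size != 0:
        raise ValueError("number of pixels must be divisible by patch_size")

    # In nested order, consecutive runs of 4**k indices share a common
    # ancestor pixel, so a reshape is enough to form the patches.
    return pixels.reshape(n_pixels // patch_size, patch_size, *pixels.shape[1:])


# A full-sphere HEALPix map has 12 * nside**2 pixels.
nside = 4
n_pixels = 12 * nside**2
features = np.arange(n_pixels)  # stand-in for per-pixel features

patches = nested_patches(features, patch_size=4)
```

Here `patches[q]` holds the four pixels whose parent at the next coarser resolution has nested index `q`. The same reshape applied with a larger power of four gives windows spanning several such patches, which is the sense in which the data stays one-dimensional throughout.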

Equivariance versus Augmentation for Spherical Images

Jan E. Gerken, Oscar Carlsson, Hampus Linander, Fredrik Ohlsson, Christoffer Petersson, Daniel Persson

We analyze the role of rotational equivariance in convolutional neural networks (CNNs) applied to spherical images. We compare the performance of the group equivariant networks known as S2CNNs and standard non-equivariant CNNs trained with an increasing amount of data augmentation. The chosen architectures can be considered baseline references for the respective design paradigms. Our models are trained and evaluated on single or multiple items from the MNIST- or FashionMNIST dataset projected onto the sphere. For the task of image classification, which is inherently rotationally invariant, we find that by considerably increasing the amount of data augmentation and the size of the networks, it is possible for the standard CNNs to reach at least the same performance as the equivariant network. In contrast, for the inherently equivariant task of semantic segmentation, the non-equivariant networks are consistently outperformed by the equivariant networks with significantly fewer parameters. We also analyze and compare the inference latency and training times of the different networks, enabling detailed tradeoff considerations between equivariant architectures and data augmentation for practical problems.

Talks

HEAL-SWIN and equivariant non-linear maps

Oscar Carlsson

In this talk I give a brief introduction to two of my papers, “HEAL-SWIN: A Vision Transformer On The Sphere” and “Equivariant non-linear maps for neural networks on homogeneous spaces”, and their main results.

Introduction to Machine Learning and Geometric Deep Learning

Oscar Carlsson

In this talk I give a very high-level introduction to machine learning and geometric deep learning. The intended audience is people with no previous knowledge of ML or GDL.

Differential Geometry in Equivariant CNNs via Biprincipal Bundles

Oscar Carlsson

The standard convolutional layer in convolutional neural networks (CNNs) arises from a general linear map constrained by translation equivariance. This constraint leads to the classical weight-sharing property of the integration kernel. Interestingly, one can also derive this property from a purely differential geometry viewpoint. In this talk, I will present this alternative derivation for the weight-sharing constraint of the standard CNN and then extend this framework to a more general setting using biprincipal bundles. Finally, I will discuss how this differential geometry framework connects to established approaches for equivariant CNNs on homogeneous spaces.
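For reference, the classical equivariance argument behind the first two sentences can be sketched as follows. The notation \(\kappa\), \(L_t\) and \(\hat{\kappa}\) is chosen here for illustration, and this is the standard argument rather than the differential-geometric derivation presented in the talk. Write a general linear map on feature maps \(f\) as an integral operator and let translations act by \((L_t f)(x) = f(x-t)\):

\[
(\Phi f)(x) = \int \kappa(x,y)\, f(y)\, \mathrm{d}y ,
\qquad
\Phi \circ L_t = L_t \circ \Phi \quad \text{for all } t .
\]

Substituting \(y \mapsto y + t\) on the left-hand side gives

\[
\int \kappa(x, y+t)\, f(y)\, \mathrm{d}y = \int \kappa(x-t, y)\, f(y)\, \mathrm{d}y
\quad \text{for all } f
\;\Longrightarrow\;
\kappa(x+t, y+t) = \kappa(x,y) .
\]

Choosing \(t = -y\) shows that the kernel depends only on the difference of its arguments, \(\kappa(x,y) = \kappa(x-y, 0) =: \hat{\kappa}(x-y)\), so that

\[
(\Phi f)(x) = \int \hat{\kappa}(x-y)\, f(y)\, \mathrm{d}y ,
\]

which is a convolution with a single shared kernel \(\hat{\kappa}\): the weight-sharing property.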

HEAL-SWIN: A Vision Transformer On The Sphere

Oscar Carlsson

High-resolution wide-angle fisheye images are becoming more and more important for robotics applications such as autonomous driving. However, using ordinary convolutional neural networks or vision transformers on this data is problematic due to projection and distortion losses introduced when projecting to a rectangular grid on the plane. We introduce the HEAL-SWIN transformer, which combines the highly uniform Hierarchical Equal Area iso-Latitude Pixelation (HEALPix) grid used in astrophysics and cosmology with the Hierarchical Shifted-Window (SWIN) transformer to yield an efficient and flexible model capable of training on high-resolution, distortion-free spherical data. In HEAL-SWIN, the nested structure of the HEALPix grid is used to perform the patching and windowing operations of the SWIN transformer, enabling the network to process spherical representations with minimal computational overhead. We demonstrate the superior performance of our model on both synthetic and real automotive datasets, as well as a selection of other image datasets, for semantic segmentation, depth regression and classification tasks.

HEAL-SWIN: A Vision Transformer On The Sphere

Oscar Carlsson

High-resolution wide-angle images, such as fisheye images, are increasingly important in applications like robotics and autonomous driving. Traditional neural networks struggle with these images due to projection and distortion losses when operating on their flat projections. In this presentation, I will introduce the HEAL-SWIN model, which addresses this issue by combining the SWIN transformer with the Hierarchical Equal Area iso-Latitude Pixelation (HEALPix) grid from astrophysics. This integration enables the HEAL-SWIN model to process inherently spherical data without projections, effectively eliminating distortion losses.

A popular science introduction to geometric deep learning

Oscar Carlsson

A popular science introduction to geometric deep learning for high school teachers. (Given in Swedish.)

Half-way seminar: Geometric deep learning for data on manifolds and spherical images

Oscar Carlsson

Geometric Deep Learning (GDL) is a vast and rapidly advancing field. In this talk, I provide a brief introduction to GDL, covering some approaches and applications along with a few examples. An essential aspect of GDL is how the model handles symmetries in data or spaces. For data defined on a manifold, one such symmetry is the choice of local coordinates, and I will present our formulation of a convolutional layer which is equivariant to the choice of local coordinates (gauge equivariant), along with a brief overview of the required structures and concepts. Finally, I will discuss our findings on the benefits and drawbacks of enforcing equivariance in the model compared to augmenting the training data. Based on these findings, I will present some questions to consider when choosing the best approach for your models.