Geometry, Algebra and Physics in Deep Neural Networks

The research group on Geometry, Algebra and Physics in Deep Neural Networks (GAPinDNNs) is based at the Department for Mathematical Sciences at Chalmers University of Technology and the University of Gothenburg. Our vision is to develop a mathematical foundation for deep learning which elevates the field into a theoretically well-grounded science.

News

Elias in Boston

Our PhD student Elias will spend the spring in Boston on a WASP-funded research visit, working with Maurice Weiler at MIT and Robin Walters at Northeastern University on equivariant neural scaling laws.

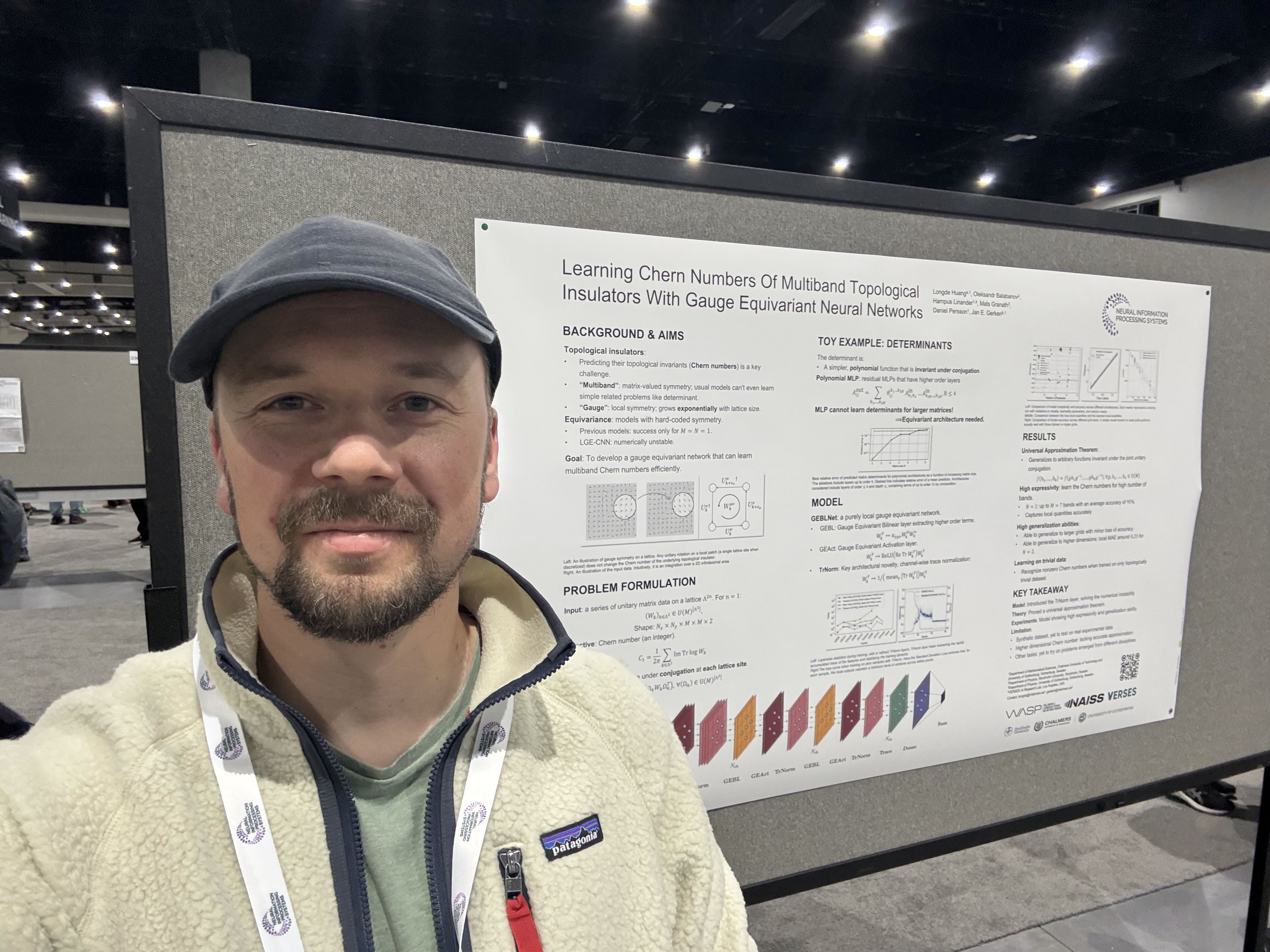

Poster session at NeurIPS 2025

Lots of interesting discussion at the poster session here on the first day of NeurIPS 2025 in San Diego! Hampus Linander presented our paper Learning Chern Numbers of Topological Insulators with Gauge Equivariant Neural Networks by Longde Huang, Oleksandr Balabanov, Hampus Linander, Mats Granath, Daniel Persson and Jan Gerken.

Paper accepted in AI4Science workshop at NeurIPS 2025

Our paper PEAR: Equal Area Weather Forecasting on the Sphere by Hampus Linander, Christoffer Petersson, Daniel Persson and Jan Gerken was accepted in the AI4Science workshop at NeurIPS 2025. In this paper we show how to do compute-efficient global weather forecasting using the HEALPix grid.

Paper accepted in NeurIPS 2025

Our paper Learning Chern Numbers of Topological Insulators with Gauge Equivariant Neural Networks has been accepted for a poster at NeurIPS 2025! In this paper, we combine lattice gauge equivariant networks with a novel training mechanism to learn topological invariants (Chern numbers) of topological insulators. The work brings together several beautiful topics from machine learning, physics and mathematics.

First author is our new PhD student Longde Huang. Congratulations on his first publication! From our group, Hampus Linander, Daniel Persson and Jan Gerken were also involved. Thanks to our physics collaborators Oleksandr Balabanov (then at Stockholm University) and Mats Granath (University of Gothenburg) for their expertise and a fun collaboration!

Philipp’s internship at Genentech

Today, Philipp is starting his 10-month internship at Genentech (Roche) in Switzerland. Under the supervision of Pan Kessel, he will explore new approaches to generative protein design. We wish him a successful start!

Welcome to our new group members Miaowen and Longde

We are happy to announce that our group welcomes two new PhD students: Miaowen Dong and Longde Huang. They will both work under the supervision of Jan. Their respective research areas can be found at their linked profiles.

A new PhD: Oscar Carlsson

We proudly announce that Oscar successfully defended his thesis “Geometry and Symmetry in Deep Learning: From Mathematical Foundations to Vision Applications” in front of his opponent Remco Duits and the committee consisting of Kathlén Kohn, Fredrik Kahl, and Jun Yu. The whole group wishes him all the best on his future career path!

Philipp completed his half-way seminar

At Chalmers it is customary for PhD students to summarize their research after roughly the first half of their PhD. Philipp successfully presented his progress in a half-way seminar talk.

Group excursion

With the Swedish summer about to end, we came together for a memorable group excursion. Thanks to the surprisingly good weather, exploring Gothenburg's archipelago by kayak was a pleasant and exciting experience. A few capsizes added to the adventure, and are all part of the learning process. To cap off the day, Daniel treated us to an authentic Mexican-themed BBQ evening.

GDL Workshop in Umeå