Jan Gerken

Assistant Professor

About me

I lead a research group on the mathematical foundations of AI, funded by the Wallenberg AI, Autonomous Systems and Software Program.

I have a background in string theory; in particular, I computed string scattering amplitudes at genus one during my PhD. My current main research interests are:

- wide neural networks, neural tangent kernels and connections to quantum field theory

- mathematical aspects of geometric deep learning, ranging from the geometry of the data manifold to equivariant neural networks

- computer vision for spherical data

I am also interested in quantum chemistry and quantum computing.

Publications

Finite-Width Neural Tangent Kernels from Feynman Diagrams #

Max Guillen, Philipp Misof, Jan E. Gerken

Neural tangent kernels (NTKs) are a powerful tool for analyzing deep, non-linear neural networks. In the infinite-width limit, NTKs can easily be computed for most common architectures, yielding full analytic control over the training dynamics. However, at infinite width, important properties of training such as NTK evolution or feature learning are absent. Nevertheless, finite width effects can be included by computing corrections to the Gaussian statistics at infinite width. We introduce Feynman diagrams for computing finite-width corrections to NTK statistics. These dramatically simplify the necessary algebraic manipulations and enable the computation of layer-wise recursive relations for arbitrary statistics involving preactivations, NTKs and certain higher-derivative tensors (dNTK and ddNTK) required to predict the training dynamics at leading order. We demonstrate the feasibility of our framework by extending stability results for deep networks from preactivations to NTKs and proving the absence of finite-width corrections for scale-invariant nonlinearities such as ReLU on the diagonal of the Gram matrix of the NTK. We validate our results with numerical experiments.

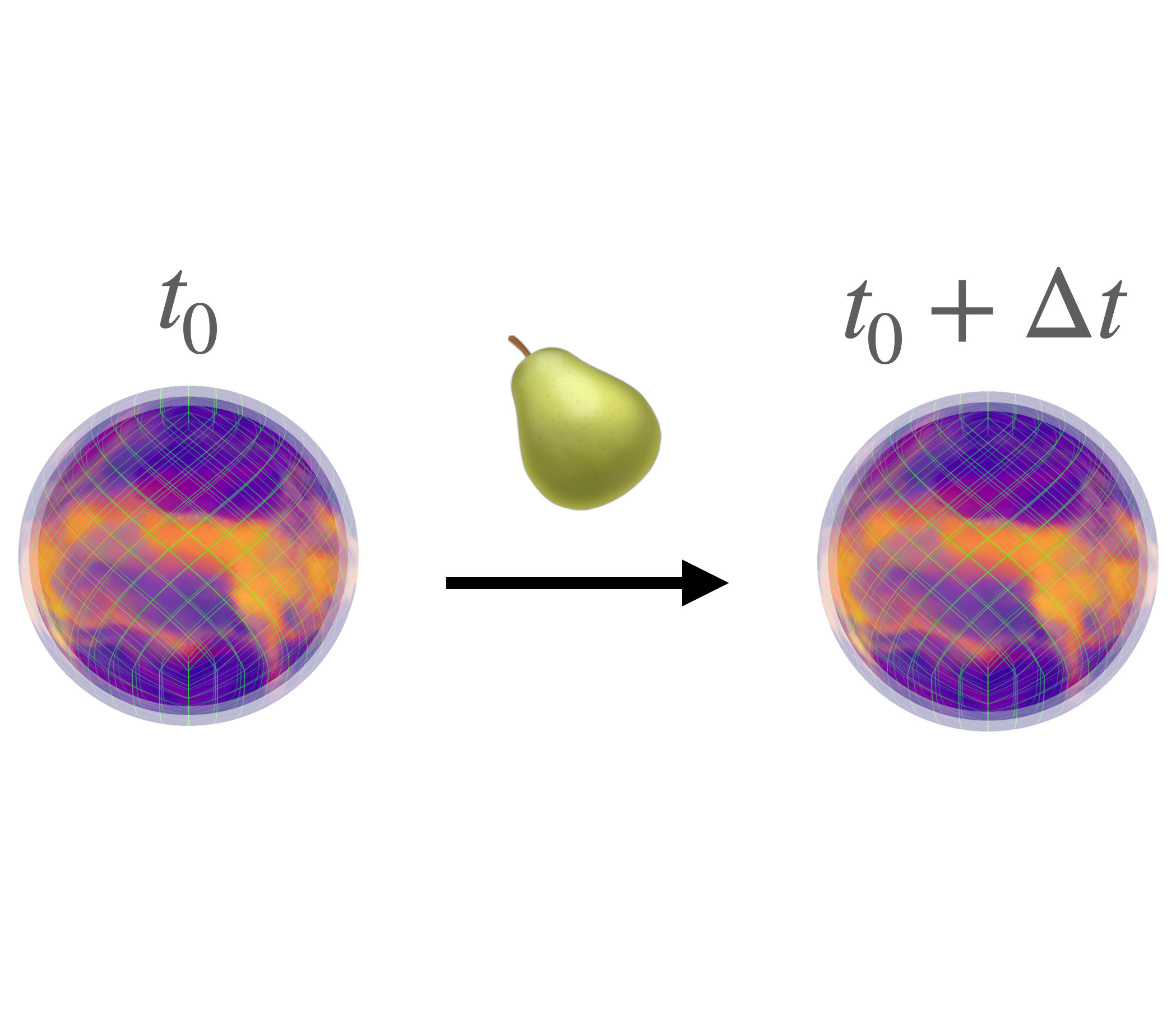
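To make the finite-width effect mentioned in the abstract above concrete, here is a minimal numerical sketch. It is not the diagrammatic method of the paper: it simply evaluates the empirical NTK, \(\Theta(x,x')=\nabla_\theta f(x)\cdot\nabla_\theta f(x')\), for a toy two-layer ReLU network in NTK parameterization and measures how strongly it fluctuates across random initializations. The network, the helper names (`init_params`, `param_gradient`, `empirical_ntk`, `ntk_spread`) and the inputs are all assumptions made for illustration.

```python
import numpy as np

def init_params(rng, d_in, width):
    """Standard-normal weights; the 1/sqrt(fan_in) factors sit in the forward pass."""
    return rng.standard_normal((width, d_in)), rng.standard_normal(width)

def forward(params, x):
    """Scalar output f(x) = w2 . relu(W1 x / sqrt(d_in)) / sqrt(width)."""
    W1, w2 = params
    width, d_in = W1.shape
    return w2 @ np.maximum(W1 @ x / np.sqrt(d_in), 0.0) / np.sqrt(width)

def param_gradient(params, x):
    """Gradient of f(x) with respect to all parameters, flattened as [W1, w2]."""
    W1, w2 = params
    width, d_in = W1.shape
    pre = W1 @ x / np.sqrt(d_in)
    grad_w2 = np.maximum(pre, 0.0) / np.sqrt(width)
    # df/dW1[i, j] = w2[i] * relu'(pre[i]) * x[j] / sqrt(width * d_in)
    grad_W1 = np.outer(w2 * (pre > 0), x) / np.sqrt(width * d_in)
    return np.concatenate([grad_W1.ravel(), grad_w2])

def empirical_ntk(params, xs):
    """Gram matrix of parameter gradients over the inputs xs."""
    jac = np.stack([param_gradient(params, x) for x in xs])
    return jac @ jac.T

def ntk_spread(width, xs, n_seeds=20):
    """Standard deviation of Theta(x_0, x_1) over random initializations."""
    vals = [
        empirical_ntk(init_params(np.random.default_rng(s), xs.shape[1], width), xs)[0, 1]
        for s in range(n_seeds)
    ]
    return float(np.std(vals))

xs = np.array([[1.0, 0.0, -1.0], [0.5, 2.0, 0.0]])
theta = empirical_ntk(init_params(np.random.default_rng(0), 3, 64), xs)
print(theta)
print(ntk_spread(8, xs), ntk_spread(2048, xs))
```

In this toy setting the spread across initializations shrinks as the width grows, which is the sense in which the kernel becomes deterministic at infinite width and why finite-width statistics require separate treatment.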

PEAR: Equal Area Weather Forecasting on the Sphere #

Hampus Linander, Christoffer Petersson, Daniel Persson, Jan E. Gerken

Machine learning methods for global medium-range weather forecasting have recently received immense attention. Following the publication of the Pangu Weather model, the first deep learning model to outperform traditional numerical simulations of the atmosphere, numerous models have been published in this domain, building on Pangu’s success. However, all of these models operate on input data and produce predictions on the Driscoll–Healy discretization of the sphere which suffers from a much finer grid at the poles than around the equator. In contrast, in the Hierarchical Equal Area iso-Latitude Pixelization (HEALPix) of the sphere, each pixel covers the same surface area, removing unphysical biases. Motivated by a growing support for this grid in meteorology and climate sciences, we propose to perform weather forecasting with deep learning models which natively operate on the HEALPix grid. To this end, we introduce Pangu Equal ARea (PEAR), a transformer-based weather forecasting model which operates directly on HEALPix-features and outperforms the corresponding model on Driscoll–Healy without any computational overhead.

Equivariant Neural Tangent Kernels #

Philipp Misof, Pan Kessel, Jan E. Gerken

Equivariant neural networks have in recent years become an important technique for guiding architecture selection for neural networks with many applications in domains ranging from medical image analysis to quantum chemistry. In particular, as the most general linear equivariant layers with respect to the regular representation, group convolutions have been highly impactful in numerous applications. Although equivariant architectures have been studied extensively, much less is known about the training dynamics of equivariant neural networks. Concurrently, neural tangent kernels (NTKs) have emerged as a powerful tool to analytically understand the training dynamics of wide neural networks. In this work, we combine these two fields for the first time by giving explicit expressions for NTKs of group convolutional neural networks. In numerical experiments, we demonstrate superior performance for equivariant NTKs over non-equivariant NTKs on a classification task for medical images.

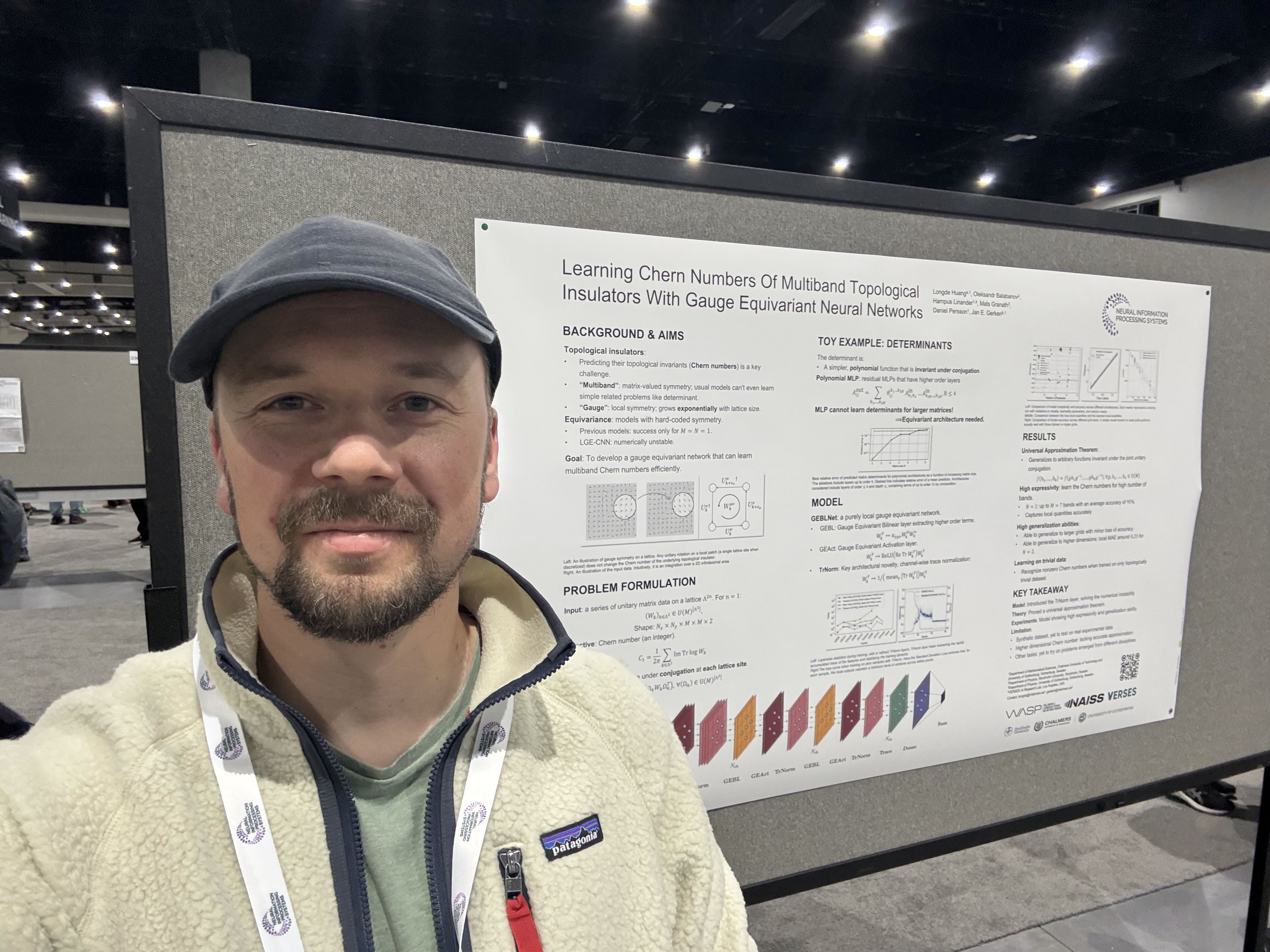

Learning Chern Numbers of Topological Insulators with Gauge Equivariant Neural Networks #

Longde Huang, Oleksandr Balabanov, Hampus Linander, Mats Granath, Daniel Persson, Jan E. Gerken

Equivariant network architectures are a well-established tool for predicting invariant or equivariant quantities. However, almost all learning problems considered in this context feature a global symmetry, i.e. each point of the underlying space is transformed with the same group element, as opposed to a local gauge symmetry, where each point is transformed with a different group element, exponentially enlarging the size of the symmetry group. Gauge equivariant networks have so far mainly been applied to problems in quantum chromodynamics. Here, we introduce a novel application domain for gauge-equivariant networks in the theory of topological condensed matter physics. We use gauge equivariant networks to predict topological invariants (Chern numbers) of multiband topological insulators. The gauge symmetry of the network guarantees that the predicted quantity is a topological invariant. We introduce a novel gauge equivariant normalization layer to stabilize the training and prove a universal approximation theorem for our setup. We train on samples with trivial Chern number only but show that our models generalize to samples with non-trivial Chern number. We provide various ablations of our setup. Our code is available at this https URL.

Emergent Equivariance in Deep Ensembles #

Jan E. Gerken, Pan Kessel

We demonstrate that deep ensembles are secretly equivariant models. More precisely, we show that deep ensembles become equivariant for all inputs and at all training times by simply using data augmentation. Crucially, equivariance holds off-manifold and for any architecture in the infinite width limit. The equivariance is emergent in the sense that predictions of individual ensemble members are not equivariant but their collective prediction is. Neural tangent kernel theory is used to derive this result and we verify our theoretical insights using detailed numerical experiments.

Geometric deep learning and equivariant neural networks #

Jan E. Gerken, Jimmy Aronsson, Oscar Carlsson, Hampus Linander, Fredrik Ohlsson, Christoffer Petersson, Daniel Persson

We survey the mathematical foundations of geometric deep learning, focusing on group equivariant and gauge equivariant neural networks. We develop gauge equivariant convolutional neural networks on arbitrary manifolds \(\mathcal{M}\) using principal bundles with structure group \(K\) and equivariant maps between sections of associated vector bundles. We also discuss group equivariant neural networks for homogeneous spaces \(\mathcal {M}=G/K\), which are instead equivariant with respect to the global symmetry \(G\) on \(\mathcal {M}\). Group equivariant layers can be interpreted as intertwiners between induced representations of \(G\), and we show their relation to gauge equivariant convolutional layers. We analyze several applications of this formalism, including semantic segmentation and object detection networks. We also discuss the case of spherical networks in great detail, corresponding to the case \(\mathcal {M}=S^2=\textrm{SO}(3)/\textrm{SO}(2)\). Here we emphasize the use of Fourier analysis involving Wigner matrices, spherical harmonics and Clebsch-Gordan coefficients for \(G=\textrm{SO}(3)\), illustrating the power of representation theory for deep learning.
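As a much simpler illustration of the equivariance property surveyed above, the sketch below checks numerically that a circular convolution followed by a pointwise ReLU commutes with cyclic shifts, i.e. \(f(g\cdot x)=g\cdot f(x)\) for the cyclic group acting on a one-dimensional periodic signal. This is an assumed toy case (trivial \(K\), discrete \(G\)), not the general bundle construction of the paper; the function names are hypothetical.

```python
import numpy as np

def circular_conv(signal, kernel):
    """Circular cross-correlation: out[i] = sum_j kernel[j] * signal[(i + j) % n]."""
    n = len(signal)
    return np.array([
        sum(kernel[j] * signal[(i + j) % n] for j in range(len(kernel)))
        for i in range(n)
    ])

def shift(signal, g):
    """Action of the cyclic group element g: move every entry g steps along the circle."""
    return np.roll(signal, g)

def layer(signal, kernel):
    """Convolution followed by a pointwise ReLU; both steps commute with shifts."""
    return np.maximum(circular_conv(signal, kernel), 0.0)

rng = np.random.default_rng(0)
x = rng.standard_normal(8)   # toy periodic signal
k = rng.standard_normal(3)   # toy filter
g = 3                        # group element (shift by three positions)

shifted_then_layer = layer(shift(x, g), k)
layer_then_shifted = shift(layer(x, k), g)
print(np.allclose(shifted_then_layer, layer_then_shifted))
```

The same check applied to a generic dense layer would fail, which is the practical content of the statement that equivariance constrains the admissible linear maps.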

HEAL-SWIN: A Vision Transformer On The Sphere #

Oscar Carlsson, Jan E. Gerken, Hampus Linander, Heiner Spieß, Fredrik Ohlsson, Christoffer Petersson, Daniel Persson

High-resolution wide-angle fisheye images are becoming more and more important for robotics applications such as autonomous driving. However, using ordinary convolutional neural networks or vision transformers on this data is problematic due to projection and distortion losses introduced when projecting to a rectangular grid on the plane. We introduce the HEAL-SWIN transformer, which combines the highly uniform Hierarchical Equal Area iso-Latitude Pixelation (HEALPix) grid used in astrophysics and cosmology with the Hierarchical Shifted-Window (SWIN) transformer to yield an efficient and flexible model capable of training on high-resolution, distortion-free spherical data. In HEAL-SWIN, the nested structure of the HEALPix grid is used to perform the patching and windowing operations of the SWIN transformer, resulting in a one-dimensional representation of the spherical data with minimal computational overhead. We demonstrate the superior performance of our model for semantic segmentation and depth regression tasks on both synthetic and real automotive datasets. Our code is available at https://github.com/JanEGerken/HEAL-SWIN.
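The role of the nested structure described above can be sketched in a few lines. In the nested HEALPix ordering, a grid with resolution parameter `nside` has `12 * nside**2` equal-area pixels, and the four children of a pixel occupy consecutive indices, so grouping pixels into patches of size \(4^k\) reduces to a reshape of a one-dimensional array. The code below is an illustrative assumption, not taken from the HEAL-SWIN repository, and the helper names are hypothetical.

```python
import numpy as np

def healpix_npix(nside):
    """Number of pixels of a HEALPix grid with resolution parameter nside."""
    return 12 * nside * nside

def pixel_area(nside):
    """Solid angle covered by each pixel on the unit sphere (equal for all pixels)."""
    return 4.0 * np.pi / healpix_npix(nside)

def patch_nested(features, patch_size):
    """Group nested-ordered pixel features of shape (npix, channels) into patches.

    patch_size must be a power of 4 so that each patch is one coarser HEALPix pixel.
    """
    npix, channels = features.shape
    size = patch_size
    while size > 1 and size % 4 == 0:
        size //= 4
    if size != 1:
        raise ValueError("patch_size must be a power of 4")
    if patch_size > npix // 12:
        raise ValueError("patch_size must not exceed the pixels per base pixel")
    return features.reshape(npix // patch_size, patch_size * channels)

nside = 4
features = np.arange(healpix_npix(nside), dtype=float).reshape(-1, 1)
patches = patch_nested(features, 4)
print(patches.shape)
print(patches[5])
```

Because each patch is a contiguous block of indices, no neighbor lookup is needed for patching, which is consistent with the minimal overhead mentioned in the abstract.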

Towards closed strings as single-valued open strings at genus one #

Jan E. Gerken, Axel Kleinschmidt, Carlos R. Mafra, Oliver Schlotterer, Bram Verbeek

We relate the low-energy expansions of world-sheet integrals in genus-one amplitudes of open- and closed-string states. The respective expansion coefficients are elliptic multiple zeta values in the open-string case and non-holomorphic modular forms dubbed “modular graph forms” for closed strings. By inspecting the differential equations and degeneration limits of suitable generating series of genus-one integrals, we identify formal substitution rules mapping the elliptic multiple zeta values of open strings to the modular graph forms of closed strings. Based on the properties of these rules, we refer to them as an elliptic single-valued map which generalizes the genus-zero notion of a single-valued map acting on multiple zeta values seen in tree-level relations between the open and closed string.

Equivariance versus Augmentation for Spherical Images #

Jan E. Gerken, Oscar Carlsson, Hampus Linander, Fredrik Ohlsson, Christoffer Petersson, Daniel Persson

We analyze the role of rotational equivariance in convolutional neural networks (CNNs) applied to spherical images. We compare the performance of the group equivariant networks known as S2CNNs and standard non-equivariant CNNs trained with an increasing amount of data augmentation. The chosen architectures can be considered baseline references for the respective design paradigms. Our models are trained and evaluated on single or multiple items from the MNIST- or FashionMNIST dataset projected onto the sphere. For the task of image classification, which is inherently rotationally invariant, we find that by considerably increasing the amount of data augmentation and the size of the networks, it is possible for the standard CNNs to reach at least the same performance as the equivariant network. In contrast, for the inherently equivariant task of semantic segmentation, the non-equivariant networks are consistently outperformed by the equivariant networks with significantly fewer parameters. We also analyze and compare the inference latency and training times of the different networks, enabling detailed tradeoff considerations between equivariant architectures and data augmentation for practical problems.

Diffeomorphic Counterfactuals With Generative Models #

Ann-Kathrin Dombrowski, Jan E. Gerken, Klaus-Robert Müller, Pan Kessel

Counterfactuals can explain classification decisions of neural networks in a human interpretable way. We propose a simple but effective method to generate such counterfactuals. More specifically, we perform a suitable diffeomorphic coordinate transformation and then perform gradient ascent in these coordinates to find counterfactuals which are classified with great confidence as a specified target class. We propose two methods to leverage generative models to construct such suitable coordinate systems that are either exactly or approximately diffeomorphic. We analyze the generation process theoretically using Riemannian differential geometry and validate the quality of the generated counterfactuals using various qualitative and quantitative measures.
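The procedure described above, gradient ascent on the target-class confidence in transformed coordinates, can be sketched with a deliberately simple stand-in. Here an invertible linear map \(x=Az+b\) plays the role of the generative model (it is exactly diffeomorphic), and a logistic classifier replaces the neural network; both are assumptions chosen so that the gradients can be written by hand, and the function names are hypothetical.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def target_confidence(x, w, c):
    """Toy classifier: probability of the target class for input x."""
    return sigmoid(w @ x + c)

def counterfactual(x0, A, b, w, c, lr=0.5, steps=1000, min_conf=0.99):
    """Gradient ascent on log p(target | x) in the coordinates z, where x = A z + b."""
    z = np.linalg.solve(A, x0 - b)          # z = g^{-1}(x0)
    for _ in range(steps):
        x = A @ z + b
        p = target_confidence(x, w, c)
        if p >= min_conf:
            break
        # d/dz log sigmoid(w . (A z + b) + c) = (1 - p) * A^T w
        z = z + lr * (1.0 - p) * (A.T @ w)
    return A @ z + b

A = np.array([[2.0, 0.0], [1.0, 1.0]])      # invertible, so the map is a diffeomorphism
b = np.zeros(2)
w = np.array([1.0, -1.0])
c = 0.0
x0 = np.array([-1.0, 1.0])                  # initially classified as the other class

x_cf = counterfactual(x0, A, b, w, c)
print(target_confidence(x0, w, c), target_confidence(x_cf, w, c))
```

In the actual setting, the choice of coordinates matters because the generative model is meant to keep the ascent close to the data manifold; the linear map here only illustrates the mechanics of the update.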

Modular Graph Forms and Scattering Amplitudes in String Theory #

Jan E. Gerken

In this thesis, we investigate the low-energy expansion of scattering amplitudes of closed strings at one-loop level (i.e. at genus one) in a ten-dimensional Minkowski background using a special class of functions called modular graph forms. These allow for a systematic evaluation of the low-energy expansion and satisfy many non-trivial algebraic and differential relations. We study these relations in detail, leading to basis decompositions for a large number of modular graph forms which greatly reduce the complexity of the expansions of the integrals appearing in the amplitude. One of the results of this thesis is a Mathematica package which automatizes these simplifications. We use these techniques to compute the leading low-energy orders of the scattering amplitude of four gluons in the heterotic string at one-loop level.

Furthermore, we study a generating function which conjecturally contains the torus integrals of all perturbative closed-string theories. We write this generating function in terms of iterated integrals of holomorphic Eisenstein series and use this approach to arrive at a more rigorous characterization of the space of modular graph forms than was possible before. For tree-level string amplitudes, the single-valued map of multiple zeta values maps open-string amplitudes to closed-string amplitudes. The definition of a suitable one-loop generalization, a so-called elliptic single-valued map, is an active area of research and we provide a new perspective on this topic using our generating function of torus integrals.

Generating series of all modular graph forms from iterated Eisenstein integrals #

Jan E. Gerken, Axel Kleinschmidt, Oliver Schlotterer

We study generating series of torus integrals that contain all so-called modular graph forms relevant for massless one-loop closed-string amplitudes. By analysing the differential equation of the generating series we construct a solution for their low-energy expansion to all orders in the inverse string tension α′. Our solution is expressed through initial data involving multiple zeta values and certain real-analytic functions of the modular parameter of the torus. These functions are built from real and imaginary parts of holomorphic iterated Eisenstein integrals and should be closely related to Brown’s recent construction of real-analytic modular forms. We study the properties of our real-analytic objects in detail and give explicit examples to a fixed order in the α′-expansion. In particular, our solution allows for a counting of linearly independent modular graph forms at a given weight, confirming previous partial results and giving predictions for higher, hitherto unexplored weights. It also sheds new light on the topic of uniform transcendentality of the α′-expansion.

Basis Decompositions and a Mathematica Package for Modular Graph Forms #

Jan E. Gerken

Modular graph forms (MGFs) are a class of non-holomorphic modular forms which naturally appear in the low-energy expansion of closed-string genus-one amplitudes and have generated considerable interest from pure mathematicians. MGFs satisfy numerous non-trivial algebraic- and differential relations which have been studied extensively in the literature and lead to significant simplifications. In this paper, we systematically combine these relations to obtain basis decompositions of all two- and three-point MGFs of total modular weight \(w+\bar{w}\leq12\), starting from just two well-known identities for banana graphs. Furthermore, we study previously known relations in the integral representation of MGFs, leading to a new understanding of holomorphic subgraph reduction as Fay identities of Kronecker–Eisenstein series and opening the door towards decomposing divergent graphs. We provide a computer implementation for the manipulation of MGFs in the form of the Mathematica package ModularGraphForms which includes the basis decompositions obtained.

All-order differential equations for one-loop closed-string integrals and modular graph forms #

Jan E. Gerken, Axel Kleinschmidt, Oliver Schlotterer

We investigate generating functions for the integrals over world-sheet tori appearing in closed-string one-loop amplitudes of bosonic, heterotic and type-II theories. These closed-string integrals are shown to obey homogeneous and linear differential equations in the modular parameter of the torus. We spell out the first-order Cauchy-Riemann and second-order Laplace equations for the generating functions for any number of external states. The low-energy expansion of such torus integrals introduces infinite families of non-holomorphic modular forms known as modular graph forms. Our results generate homogeneous first- and second-order differential equations for arbitrary such modular graph forms and can be viewed as a step towards all-order low-energy expansions of closed-string integrals.

Heterotic-string amplitudes at one loop: modular graph forms and relations to open strings #

Jan E. Gerken, Axel Kleinschmidt, Oliver Schlotterer

We investigate one-loop four-point scattering of non-abelian gauge bosons in heterotic string theory and identify new connections with the corresponding open-string amplitude. In the low-energy expansion of the heterotic-string amplitude, the integrals over torus punctures are systematically evaluated in terms of modular graph forms, certain non-holomorphic modular forms. For a specific torus integral, the modular graph forms in the low-energy expansion are related to the elliptic multiple zeta values from the analogous open-string integrations over cylinder boundaries. The detailed correspondence between these modular graph forms and elliptic multiple zeta values supports a recent proposal for an elliptic generalization of the single-valued map at genus zero.

Holomorphic subgraph reduction of higher-point modular graph forms #

Jan E. Gerken, Justin Kaidi

Modular graph forms are a class of modular covariant functions which appear in the genus-one contribution to the low-energy expansion of closed string scattering amplitudes. Modular graph forms with holomorphic subgraphs enjoy the simplifying property that they may be reduced to sums of products of modular graph forms of strictly lower loop order. In the particular case of dihedral modular graph forms, a closed form expression for this holomorphic subgraph reduction was obtained previously by D’Hoker and Green. In the current work, we extend these results to trihedral modular graph forms. Doing so involves the identification of a modular covariant regularization scheme for certain conditionally convergent sums over discrete momenta, with some elements of the sum being excluded. The appropriate regularization scheme is identified for any number of exclusions, which in principle allows one to perform holomorphic subgraph reduction of higher-point modular graph forms with arbitrary holomorphic subgraphs.

Talks

Neural Tangent Kernels: Data augmentation and Feynman diagrams #

Jan E. Gerken

In this talk, I will discuss how neural tangent kernels (NTKs) can be used to understand the symmetry properties of deep ensembles trained with data augmentation. In the infinite-width limit, we prove that such ensembles are equivariant at any training step, even off-manifold, and that the predictor becomes equivalent to a group convolutional neural network. This equivariance is emergent; individual ensemble members are not equivariant, but their collective prediction is. I will prove this theoretically using NTK theory and verify our insights with numerical experiments.

I will also discuss recent work on going beyond the infinite-width limit using Feynman diagrams. While infinite-width NTKs are analytically tractable, they miss important phenomena like NTK evolution and feature learning. I introduce a diagrammatic approach for computing finite-width corrections that dramatically simplifies the necessary calculations and enables calculation of neural network statistics at finite width.

Structure and Dynamics of Deep Neural Networks: A Perspective from Geometry and Physics #

Jan E. Gerken

In this lecture, I will give a broad overview of my research over the past several years and outline how the different threads fit together into a coherent agenda.

Modern neural networks are extraordinarily powerful, yet they implement highly nonlinear functions whose mathematical structure remains difficult to analyze. One way to make progress is to adopt a geometric perspective. When the underlying data distribution exhibits symmetries, we can incorporate these directly into network architectures via equivariance, leading to the framework of geometric deep learning. This approach has seen significant empirical success, but it also raises an important question: should symmetries be built into the model, or can they be learned from data?

Addressing this question requires a theoretical understanding of learning dynamics. I will discuss our recent progress on this problem in the limit of infinitely wide networks, where the training dynamics simplify and become analytically tractable. Building on tools inspired by Feynman diagrams in theoretical physics, we have further developed a diagrammatic method for computing corrections beyond this infinite-width limit. Together, these results provide a unified view of how geometry and physics can illuminate the behavior of deep neural networks.

Equivariant Neural Networks: From Training Dynamics to Topology #

Jan E. Gerken

Emergent Equivariance in Deep Ensembles #

Jan E. Gerken

In this talk, I will discuss recent results on the symmetry properties of deep ensembles trained with data augmentation. Specifically, we show that the ensemble is equivariant at any training step, provided that data augmentation is used. Crucially, this equivariance also holds off-manifold and therefore goes beyond the intuition that data augmentation leads to approximately equivariant predictions. Furthermore, equivariance is emergent in the sense that predictions of individual ensemble members are not equivariant but their collective prediction is. Therefore, the deep ensemble is indistinguishable from a manifestly equivariant predictor. In the infinite width limit, this predictor is in fact a group convolutional neural network. We prove this theoretically using neural tangent kernel theory and verify our theoretical insights using detailed numerical experiments. Based on joint work with Pan Kessel and Philipp Misof.

Emergent Equivariance in Deep Ensembles #

Jan E. Gerken

In this talk, I will discuss recent results on the symmetry properties of deep ensembles trained with data augmentation. Specifically, we show that the ensemble is equivariant at any training step, provided that data augmentation is used. Crucially, this equivariance also holds off-manifold and therefore goes beyond the intuition that data augmentation leads to approximately equivariant predictions. Furthermore, equivariance is emergent in the sense that predictions of individual ensemble members are not equivariant but their collective prediction is. Therefore, the deep ensemble is indistinguishable from a manifestly equivariant predictor. In the infinite width limit, this predictor is in fact a group convolutional neural network. We prove this theoretically using neural tangent kernel theory and verify our theoretical insights using detailed numerical experiments. Based on joint work with Pan Kessel and Philipp Misof: arXiv:2403.03103 and arXiv:2406.06504.

Emergent Equivariance in Deep Ensembles #

Jan E. Gerken

In this talk, I will discuss recent results on the symmetry properties of deep ensembles trained with data augmentation. Specifically, we show that the ensemble is equivariant at any training step, provided that data augmentation is used. Crucially, this equivariance also holds off-manifold and therefore goes beyond the intuition that data augmentation leads to approximately equivariant predictions. Furthermore, equivariance is emergent in the sense that predictions of individual ensemble members are not equivariant but their collective prediction is. Therefore, the deep ensemble is indistinguishable from a manifestly equivariant predictor. In the infinite width limit, this predictor is in fact a group convolutional neural network. We prove this theoretically using neural tangent kernel theory and verify our theoretical insights using detailed numerical experiments. Based on joint work with Pan Kessel and Philipp Misof: arXiv:2403.03103 and arXiv:2406.06504.

Emergent Equivariance in Deep Ensembles #

Jan E. Gerken

In this talk, I will discuss recent results on the symmetry properties of deep ensembles trained with data augmentation. Specifically, we show that the ensemble is equivariant at any training step, provided that data augmentation is used. Crucially, this equivariance also holds off-manifold and therefore goes beyond the intuition that data augmentation leads to approximately equivariant predictions. Furthermore, equivariance is emergent in the sense that predictions of individual ensemble members are not equivariant but their collective prediction is. Therefore, the deep ensemble is indistinguishable from a manifestly equivariant predictor. In the infinite width limit, this predictor is in fact a group convolutional neural network. We prove this theoretically using neural tangent kernel theory and verify our theoretical insights using detailed numerical experiments. Based on joint work with Pan Kessel and Philipp Misof: http://arxiv.org/abs/2403.03103 and http://arxiv.org/abs/2406.06504

Emergent Equivariance in Deep Ensembles #

Jan E. Gerken

Symmetries in AI4Science #

Jan E. Gerken

Symmetries are of fundamental importance in all of science and therefore critical for the success of deep learning systems used in this domain. In this talk, I will give an overview of the different forms in which symmetries appear in physics and chemistry and explain the theoretical background behind equivariant neural networks. Then, I will discuss common ways of constructing equivariant networks in different settings and contrast manifestly equivariant networks with other techniques for reaching equivariant models. Finally, I will report on recent results about the symmetry properties of deep ensembles trained with data augmentation.

Geometric Deep Learning and Neural Tangent Kernels #

Jan E. Gerken

Symmetries and Neural Tangent Kernels #

Jan E. Gerken

Emergent Equivariance in Deep Ensembles #

Jan E. Gerken

We demonstrate that a generic deep ensemble is emergently equivariant under data augmentation in the large width limit. Specifically, the ensemble is equivariant at any training step, provided that data augmentation is used. Crucially, this equivariance also holds off-manifold and therefore goes beyond the intuition that data augmentation leads to approximately equivariant predictions. Furthermore, equivariance is emergent in the sense that predictions of individual ensemble members are not equivariant but their collective prediction is. Therefore, the deep ensemble is indistinguishable from a manifestly equivariant predictor. We prove this theoretically using neural tangent kernel theory and verify our theoretical insights using detailed numerical experiments. Based on joint work with Pan Kessel.

Emergent Equivariance in Deep Ensembles #

Jan E. Gerken

Emergent Equivariance in Deep Ensembles #

Jan E. Gerken

We demonstrate that a generic deep ensemble is emergently equivariant under data augmentation in the large width limit. Specifically, the ensemble is equivariant at any training step, provided that data augmentation is used. Crucially, this equivariance also holds off-manifold and therefore goes beyond the intuition that data augmentation leads to approximately equivariant predictions. Furthermore, equivariance is emergent in the sense that predictions of individual ensemble members are not equivariant but their collective prediction is. Therefore, the deep ensemble is indistinguishable from a manifestly equivariant predictor. We prove this theoretically using neural tangent kernel theory and verify our theoretical insights using detailed numerical experiments. Based on joint work with Pan Kessel.

Diffeomorphic Counterfactuals and Generative Models #

Jan E. Gerken

Counterfactuals can explain classification decisions of neural networks in a human interpretable way. We propose a simple but effective method to generate such counterfactuals. More specifically, we perform a suitable diffeomorphic coordinate transformation and then perform gradient ascent in these coordinates to find counterfactuals which are classified with great confidence as a specified target class. We propose two methods to leverage generative models to construct such suitable coordinate systems that are either exactly or approximately diffeomorphic. We analyze the generation process theoretically using Riemannian differential geometry and validate the quality of the generated counterfactuals using various qualitative and quantitative measures. Related paper: https://arxiv.org/abs/2206.05075

Diffeomorphic Counterfactuals and Generative Models #

Jan E. Gerken

Neural network classifiers are black box models which lack inherent interpretability. In many practical applications like medical imaging or autonomous driving, interpretations of the network decisions are needed which are provided by the field of explainable AI. Counterfactuals provide intuitive explanations for neural network classifiers which help to identify reliance on spurious features and biases in the underlying dataset. In this talk, I will introduce Diffeomorphic Counterfactuals, a simple but effective method to generate counterfactuals. Diffeomorphic Counterfactuals are generated by performing a suitable coordinate transformation of the data space using a generative model and then gradient ascent in the new coordinates. I will present a theoretical analysis of the generation process using differential geometry and show experimental results which validate the quality of the generated counterfactuals using various qualitative and quantitative measures.

Diffeomorphic Counterfactuals #

Jan E. Gerken

Geometric Deep Learning: From Pure Math to Applications #

Jan E. Gerken

Geometric Deep Learning: From Pure Math to Applications #

Jan E. Gerken

Despite its remarkable success, deep learning lacks a strong theoretical foundation. One way to help alleviate this problem is to use ideas from differential geometry and group theory at various points in the learning process to arrive at a more principled way of setting it up. This approach goes by the name of geometric deep learning and has received a lot of attention in recent years. In this talk, I will summarize our work on some aspects of geometric deep learning, namely using group theory to guide the construction of neural network architectures and using the manifold structure of the input data to generate counterfactual explanations for neural networks motivated by differential geometry.

Equivariance versus Augmentation for Spherical Images #

Jan E. Gerken

We analyze the role of rotational equivariance in convolutional neural networks (CNNs) applied to spherical images. We compare the performance of the group equivariant networks known as S2CNNs and standard non-equivariant CNNs trained with an increasing amount of data augmentation. The chosen architectures can be considered baseline references for the respective design paradigms. Our models are trained and evaluated on single or multiple items from the MNIST- or FashionMNIST dataset projected onto the sphere. For the task of image classification, which is inherently rotationally invariant, we find that by considerably increasing the amount of data augmentation and the size of the networks, it is possible for the standard CNNs to reach at least the same performance as the equivariant network. In contrast, for the inherently equivariant task of semantic segmentation, the non-equivariant networks are consistently outperformed by the equivariant networks with significantly fewer parameters. We also analyze and compare the inference latency and training times of the different networks, enabling detailed tradeoff considerations between equivariant architectures and data augmentation for practical problems.

Diffeomorphic Counterfactuals and Generative Models #

Jan E. Gerken

Neural network classifiers are black box models which lack inherent interpretability. In many practical applications like medical imaging or autonomous driving, interpretations of the network decisions are needed which are provided by the field of explainable AI. Counterfactuals provide intuitive explanations for neural network classifiers which help to identify reliance on spurious features and biases in the underlying dataset. In this talk, I will introduce Diffeomorphic Counterfactuals, a simple but effective method to generate counterfactuals. Diffeomorphic Counterfactuals are generated by performing a suitable coordinate transformation of the data space using a generative model and then gradient ascent in the new coordinates. I will present a theoretical analysis of the generation process using differential geometry and show experimental results which validate the quality of the generated counterfactuals using various qualitative and quantitative measures.

Geometric Deep Learning #

Jan E. Gerken

The field of geometric deep learning has gained a lot of momentum in recent years and attracted people with different backgrounds such as deep learning, theoretical physics and mathematics. This is also reflected by the considerable research activity in this direction at our department. In this talk, I will give an introduction to neural networks and deep learning and mention the different branches of mathematics relevant to their study. Then, I will focus more specifically on the subject of geometric deep learning where symmetries in the underlying data are used to guide the construction of network architectures. This opens the door for mathematical tools such as representation theory and differential geometry to be used in deep learning, leading to interesting new results. I will also comment on how the cross-fertilization between machine learning and mathematics has recently benefited (pure) mathematics.

Diffeomorphic Explanations with Normalizing Flows #

Jan E. Gerken

Diffeomorphic Explanations with Normalizing Flows #

Jan E. Gerken

Normalizing flows are diffeomorphisms which are parameterized by neural networks. As a result, they can induce coordinate transformations in the tangent space of the data manifold. In this work, we demonstrate that such transformations can be used to generate interpretable explanations for decisions of neural networks. More specifically, we perform gradient ascent in the base space of the flow to generate counterfactuals which are classified with great confidence as a specified target class. We analyze this generation process theoretically using Riemannian differential geometry and establish a rigorous theoretical connection between gradient ascent on the data manifold and in the base space of the flow.